- Published on

Games 101 Note

- Authors

- Name

- Yue Zhang

- 01 Overview

- 02 Linear Algebra

- 03~04 Transformation

- $$ M_{\text{persp} \to \text{ortho}} \begin{bmatrix} x \ y \ z \ 1 \end{bmatrix}

- \begin{bmatrix} nx/z \ ny/z \ \text{unknown} \ 1 \end{bmatrix}

- $$ M_{\text{persp} \to \text{ortho}} \begin{bmatrix} x \ y \ n \ 1 \end{bmatrix}

- \begin{bmatrix} x \ y \ n \ 1 \end{bmatrix}

- $$ M_{\text{persp} \to \text{ortho}} \begin{bmatrix} 0 \ 0 \ f \ 1 \end{bmatrix}

- 05~06 Rasterization

- 07~09 Shading

- 10~12 Geometry

- 13~16 Ray Tracing

GAMES101:现代计算机图形学入门,是GAMES(Graphics And Mixed Environment Symposium)组织开设的一门线上课。讲师是闫令琪,现在是加州大学圣芭芭拉分校助理教授。我在去年九月末两周时间刷完了GAMES101(共22节课),并做了一波整理。虽然这两周经历了一些小麻烦,但是还是顺利做完了这份整理。

01 Overview

The course content mainly includes:

- Rasterization: Displaying geometric shapes in 3D space on the screen. Real-time rendering (30fps) is a significant challenge.

- Geometry Representation: How to represent curves, surfaces, and topological structures.

- Ray Tracing: Slow but realistic. Achieving real-time performance is a major challenge.

- Animation/Simulation: For example, when throwing a ball to the ground, how it bounces, compresses, and deforms.

02 Linear Algebra

Be confident, you know it all!

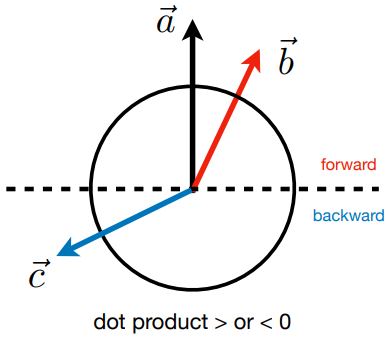

- Dot Product: Can determine front and back, as shown in the diagram below.

- Cross Product: It can determine left and right, as well as inside and outside, as shown in the diagram below.

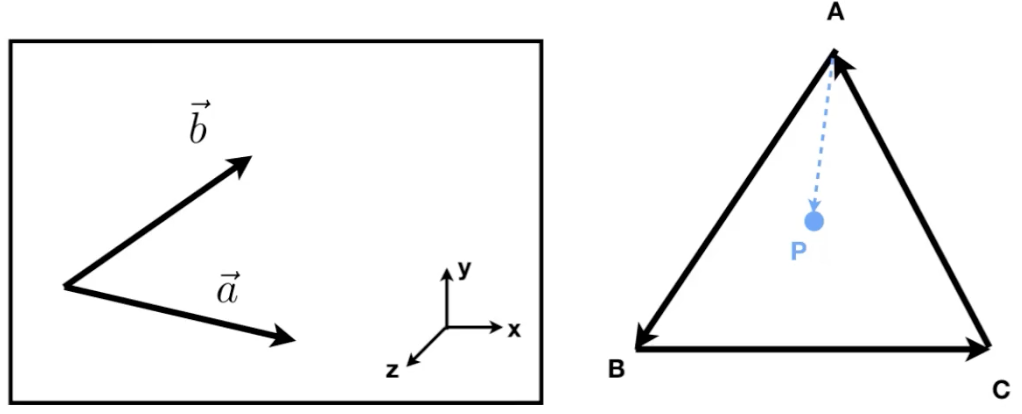

- Left Diagram: Determine left and right by checking the sign of the cross product result.

- Right Diagram: Determine whether a point is inside or outside a triangle by checking if it lies on the same side of all three edges.

03~04 Transformation

1. 2D Transformation

1.1 Affine Transformation

Affine transformation consists of linear transformation and translation.

The transformation formula is:

where the first term is a linear transformation, and the second term is translation.

The corresponding linear transformation matrix is:

Scaling (scale):

The reference position is the origin. The x-axis scales by a factor of α, and the y-axis scales by a factor of β.

Shear (shear):

The reference position is the origin. The vertical coordinates of all points remain unchanged, while the horizontal coordinates shift.

Rotation (rotate): A counterclockwise rotation by an angle θ around the origin. The rotation matrix is an orthogonal matrix, meaning: The rotation matrix derivation can be easily obtained using the transformations:

1.2 Homogenous Coordinates

Previously, we saw the affine transformation equation:

However, handling translation terms can be cumbersome. By introducing homogeneous coordinates, we can elegantly incorporate translations. The concept of homogeneous coordinates is to represent an -dimensional coordinate using an -dimensional vector.

For 2D transformations, we use 3D vectors to represent 2D coordinates (adding a -axis alongside and ):

- 2D point:

- 2D vector:

- Extended homogeneous form: where is equivalent to .

Regarding the -axis, a point's homogeneous coordinate typically has , while a vector has . This makes sense because adding a vector to a vector results in a vector, adding a point to a point is not defined, and adding a vector to a point results in a point.

Affine Transformation in Homogeneous Coordinates

Affine transformations can be rewritten as (typically performing linear transformation first, then translation):

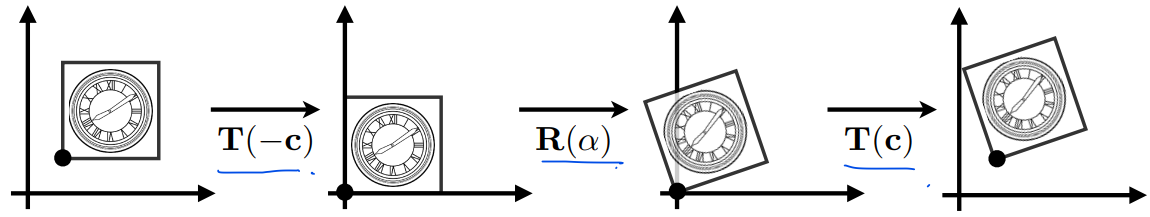

Example: Rotating Around a Given Point

Let's look at an example of how to rotate around a given point in a unified way:

- : Translate to the origin

- : Rotate by angle

- : Translate back to the original point

The entire process can be written as:

NOTE

- Matrices do not have a commutative property; the order of matrix operations is important.

- Matrices have an associative property.

2. 3D Transformation

Similarly, homogeneous coordinates can be used to represent:

- 3D point =

- 3D vector =

- () is extended to be equivalent to

Affine transformations can be written as (still applying the linear transformation first, then translation):

Components:

Scaling:

Translation:

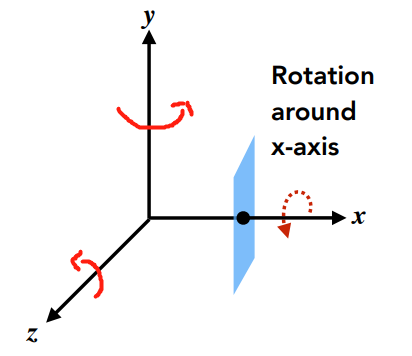

Rotation around the x-, y-, and z-axes:

Rotation about the x-axis:

Rotation about the y-axis:

Rotation about the z-axis:

3D rotation can be decomposed into sequential rotations about the x-, y-, and z-axes:

where are known as Euler angles.

Rodrigues' Rotation Formula

In 3D space, a vector rotating around an arbitrary axis by an angle results in the transformed vector . The corresponding rotation matrix is given by:

where is the identity matrix.

Proof Omitted

3. Viewing Transformation

viewing transformation

- view transformation or camera transformation

- projection transformation

- orthographic projection

- perspective projection

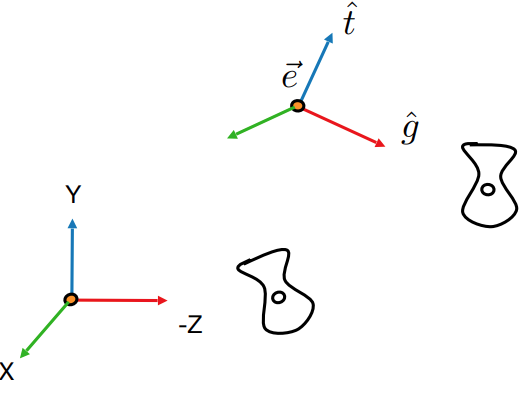

3.1 View Transformation

Understanding these parameters is analogous to adjusting the rotation of a photograph. We assume , but the camera's upward direction is conventionally defined by humans. Establishing an orthonormal basis can help avoid distortions during view transformations.

Understanding these parameters is analogous to adjusting the rotation of a photograph. We assume , but the camera's upward direction is conventionally defined by humans. Establishing an orthonormal basis can help avoid distortions during view transformations.If we maintain the relative positions of the camera and objects while translating both, the objects appear unchanged to the camera. Naturally, we want to move the camera to a fixed position, commonly known as the look-at point, where the look-at direction aligns with the negative z-axis, and the up direction corresponds to the y-axis.

This process is called view transformation or camera transformation, denoted as . Specifically:

First, we perform the translation transformation, where , and subscripts like and follow similar notation:

Next, we apply the rotation transformation, aligning , , and with the negative z-axis, y-axis, and x-axis, respectively. The inverse rotation matrix is given by:

Since rotation matrices are orthogonal, we obtain:

By substituting these into the equation, we obtain the camera transformation .

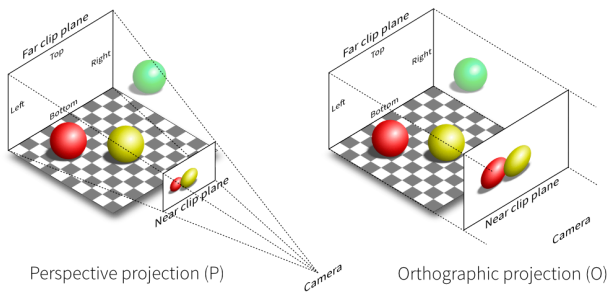

3.2 Projection Transformation

Now that the camera and objects are positioned correctly, the objects need to be projected from 3D to a 2D plane. There are two types of projection:

- Orthographic projection

- Perspective projection

However, the projection transformation introduced below actually maps a cuboid into a space.

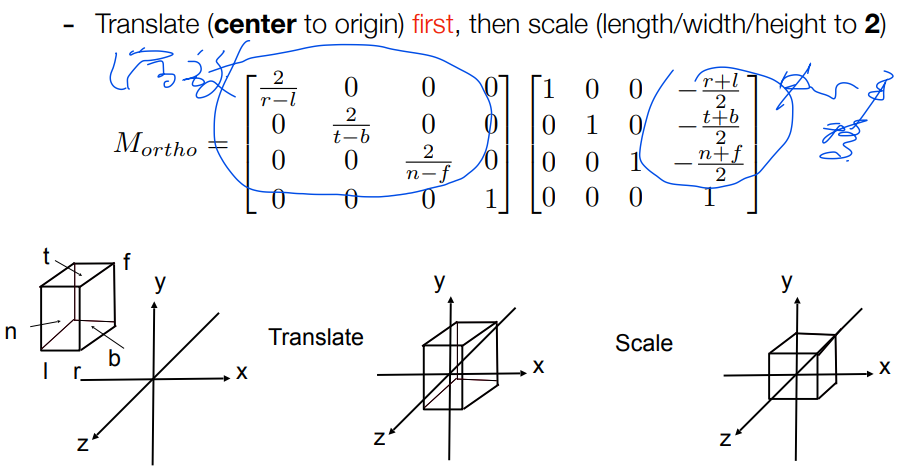

3.2.1 Orthographic Projection

Simplified Understanding of Orthographic Projection:

- The camera is at the origin, looking along the negative z-axis, with the up direction aligned with the y-axis.

- Drop off the z-axis.

- The object is translated and scaled into the range , meaning both the x- and y-coordinates are confined between and .

Common Approach:

Consider a cuboid defined by the ranges . This cuboid is transformed into the canonical cube , which means:

When using a right-handed coordinate system:

When using a right-handed coordinate system:- The left side has a smaller x value than the right.

- The bottom has a smaller y value than the top.

- However, the front has a larger z value than the back.

This is why OpenGL uses a left-handed coordinate system, where the depth axis (z) is more intuitive for rendering.

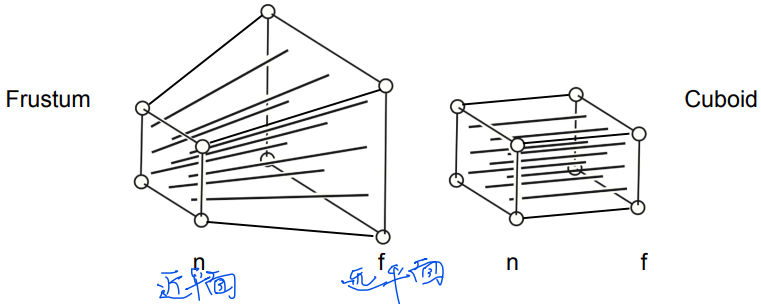

3.2.2 Perspective Projection

Steps for Perspective Projection:

Steps for Perspective Projection:: Transforms the frustum into a cuboid by "squeezing" it, ensuring that any point on the near plane remains unchanged, the z-coordinate on the far plane remains unchanged. while modifying the z-coordinates of intermediate planes.

: Applies orthographic projection, as discussed in section 3.2.1.

Finally, the perspective projection matrix is:

which gives the final result.

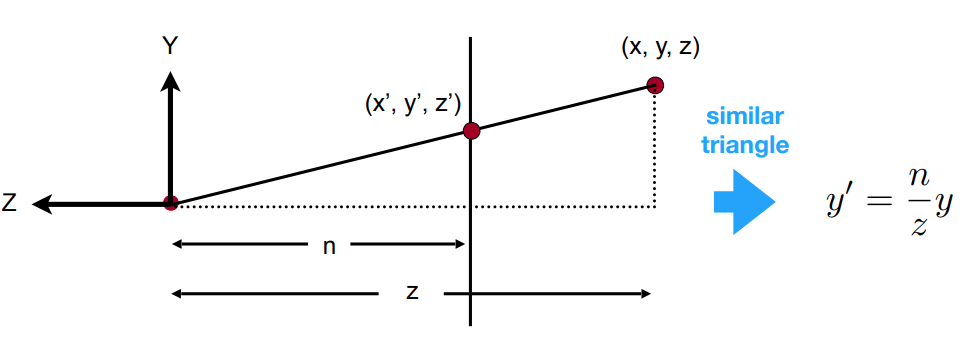

Derivation of

Consider an arbitrary point in the frustum. After transformation by , it is scaled into the form (which follows from similar triangles):

Thus, the transformation matrix takes the form:

Since a point on the near plane remains unchanged and the z-coordinate on the far plane is preserved, we have:

Solution:

From this, we derive the perspective-to-orthographic transformation matrix:

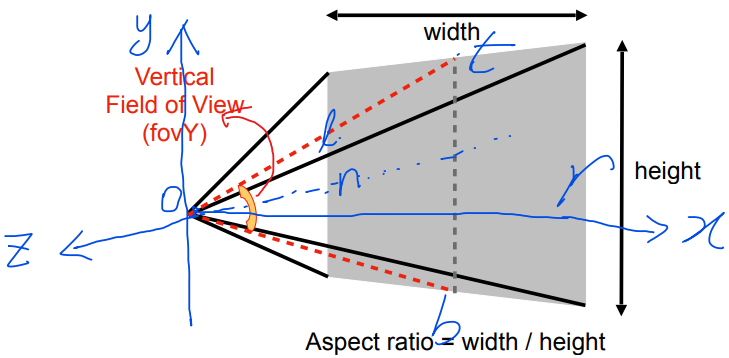

Computing Using and Aspect Ratio

Previously, the near plane was defined using:

- (x-coordinate of the left edge),

- (x-coordinate of the right edge),

- (y-coordinate of the bottom edge),

- (y-coordinate of the top edge).

Clearly, we have:

Clearly, we have:05~06 Rasterization

After the projection transformation, the object has been translated and scaled into the space.

Now, to render the object onto the screen, we perform rasterization.

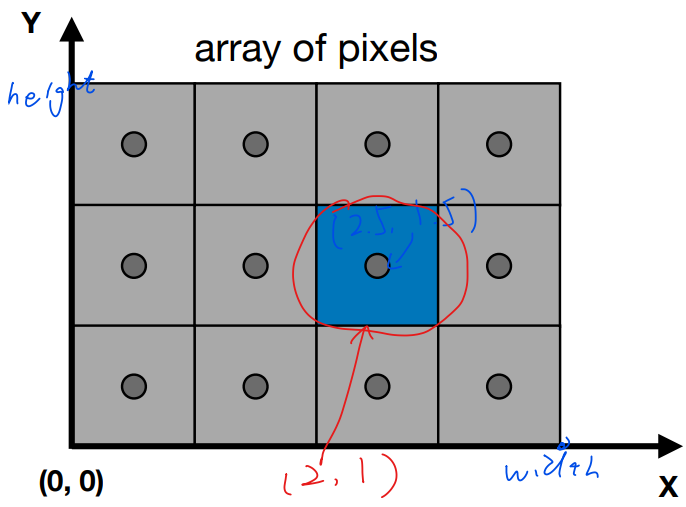

1. Defining the screen space

- Screen range: to

- Pixels are represented by integers , e.g., the pixel at in the red circle.

- Pixel range: to

- The center of a pixel is located at .

2. Viewport Transformation

Given that the object has already been translated and scaled into the space, we now want to translate and scale it into the screen range , while discarding the z-axis.

This process is known as the viewport transformation

The transformation matrix is straightforward to derive:

3. Rasterization

A triangle mesh is a widely used and effective method for representing objects. The advantages of choosing triangles include:

- The most fundamental polygon: Any other polygon can be decomposed into triangles.

- Always planar: A triangle is always a flat surface.

- Well-defined inside and outside: It has a clear distinction between the interior and exterior.

- Good interpolation properties: Enables smooth gradients and shading effects.

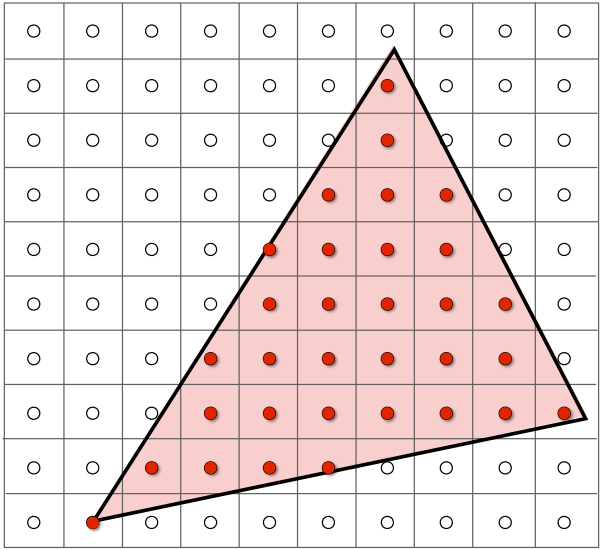

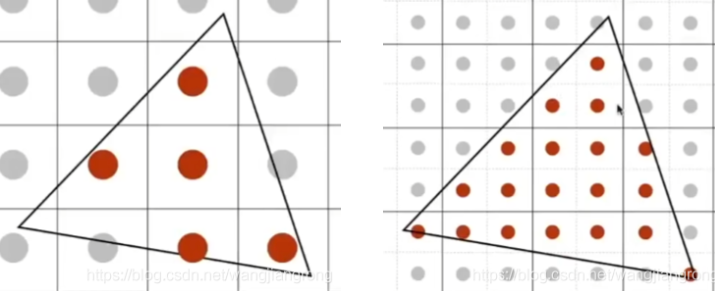

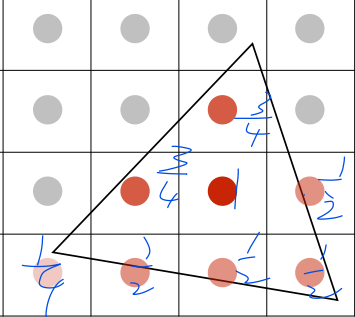

How to Represent a Triangle Using Pixels?

The approach is to sample and check whether each pixel center lies inside the triangle.

for (int x = 0; x < xmax; x++)

for (int y = 0; y < ymax; y++)

image[x][y] = inside(tri, x + 0.5, y + 0.5);

// inside function: returns 1 if inside the triangle, otherwise 0

NOTE

- How to determine whether a point is inside a triangle? Use cross products (as explained in Chapter 2).

- What if the center point is on the edge? This is user-defined and can vary based on implementation needs.

- Optimizations: Instead of iterating over the entire screen, bounding boxes can be used to enclose the triangle, limiting the pixels that need to be checked.

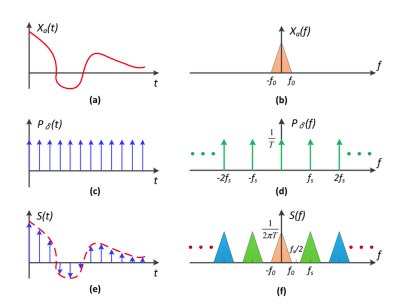

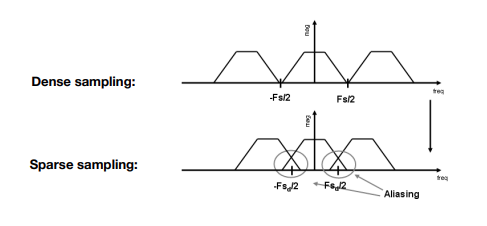

4. Sampling Artifacts

Common sampling artifacts include:

Jaggies (锯齿):

Caused by insufficient resolution, leading to visible stair-step edges on diagonal lines.Moire Pattern (摩尔纹):

Occurs when sampling frequency is too low relative to the image details.

Example: Removing odd-numbered rows/columns from an image and reconstructing it.Wagon Wheel Effect (车轮效应):

An illusion where wheels appear to rotate backward due to mismatched frame rates and object movement.Other Artifacts:

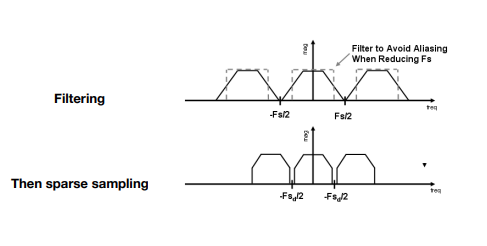

Sampling artifacts are also known as aliasing.

Sampling artifacts are also known as aliasing.This occurs when the signal changes too quickly, but the sampling rate is too low, leading to distortions.

Explaination

Aliasing = Mixed Frequency Contents

Aliasing = Mixed Frequency Contents

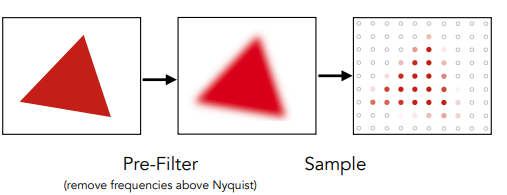

5. Anti-aliasing

MSAA

07~09 Shading

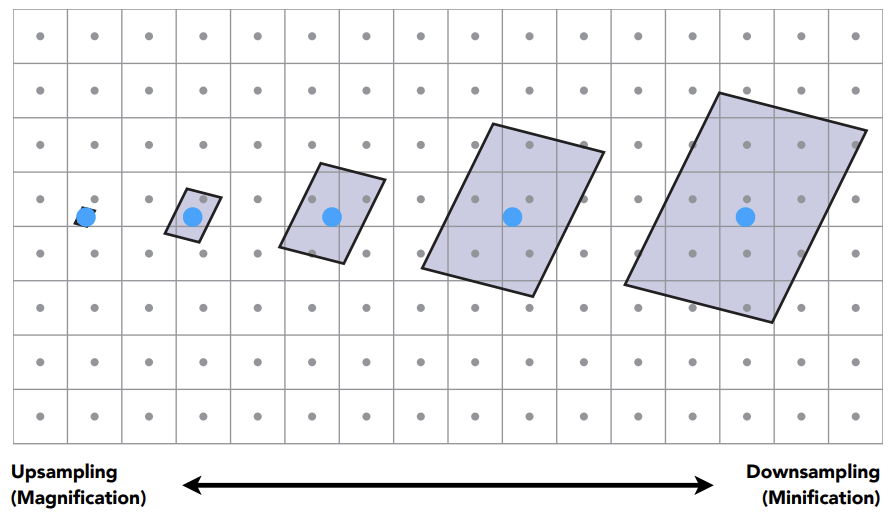

8. Texture Magnification/Minification

When applying textures, several issues may arise:

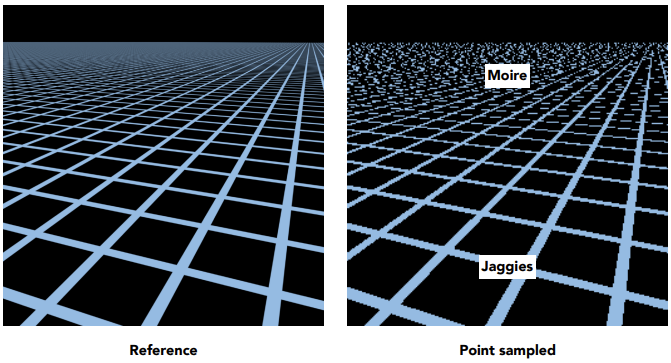

Texture Magnification:

- Occurs when the texture resolution is too low.

- Multiple screen pixels map to the same (u,v) coordinate, leading to blocky artifacts.

- This results in unnatural transitions at boundaries, forming visible pixelated grids (see Figure 8.1, Nearest).

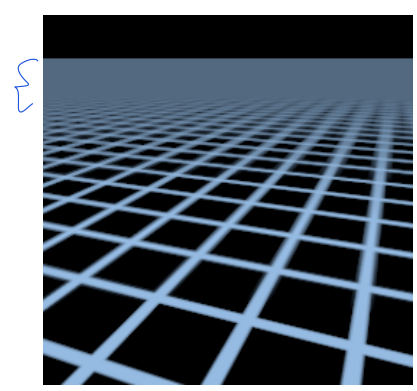

Texture Minification:

- Occurs when the texture resolution is too high.

- A single pixel on the screen maps to multiple (u,v) coordinates.

- This causes aliasing artifacts, as fine texture details cannot be properly represented (see Figure 8.2).

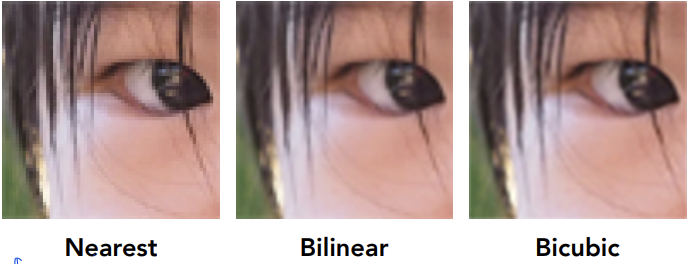

8.1 Texture Magnification

Nearest Neighbor Filtering: Simply selects the nearest texel for each pixel. Fast but produces blocky artifacts, especially when magnified.

Nearest Neighbor Filtering: Simply selects the nearest texel for each pixel. Fast but produces blocky artifacts, especially when magnified.

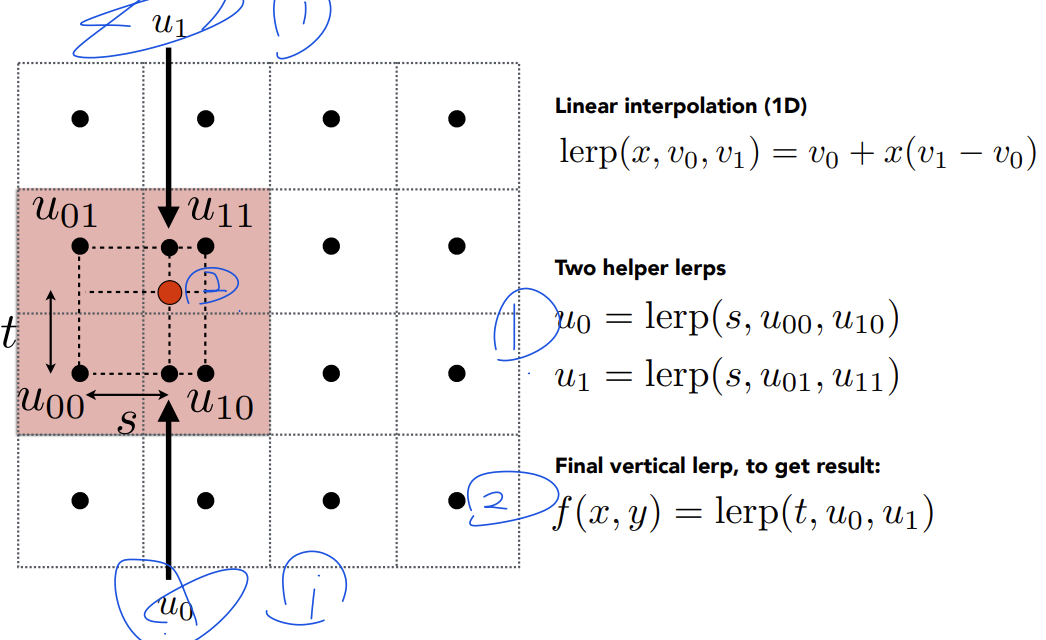

Bilinear Filtering:

- Selects the four nearest texels.

- Performs two passes of linear interpolation to compute the final color.

8.2 Texture Minification

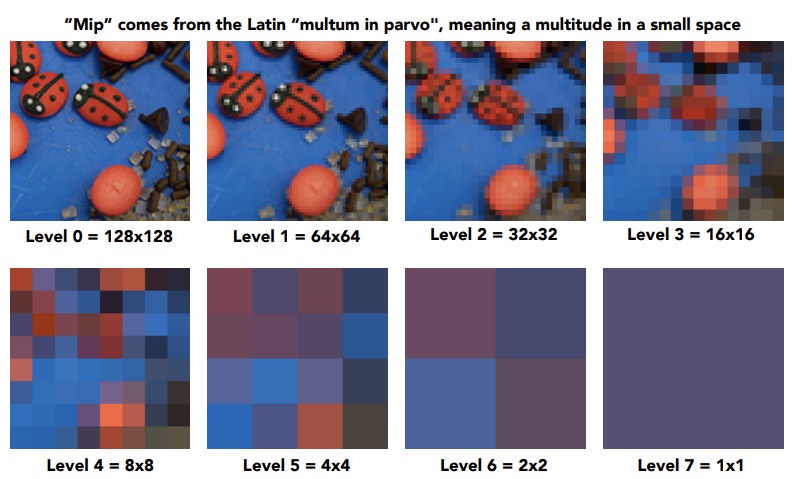

Supersampling can be used to solve aliasing issues, but it is computationally expensive. The following introduces the concept of Mipmap, which allows fast, approximate, and square-shaped range queries—providing an immediate average (or maximum, minimum, etc.) value within a region.

Supersampling can be used to solve aliasing issues, but it is computationally expensive. The following introduces the concept of Mipmap, which allows fast, approximate, and square-shaped range queries—providing an immediate average (or maximum, minimum, etc.) value within a region.Using the original texture, a mipmap is generated through preprocessing. The additional storage required is only one-third of the original image, making it efficient in terms of memory usage.

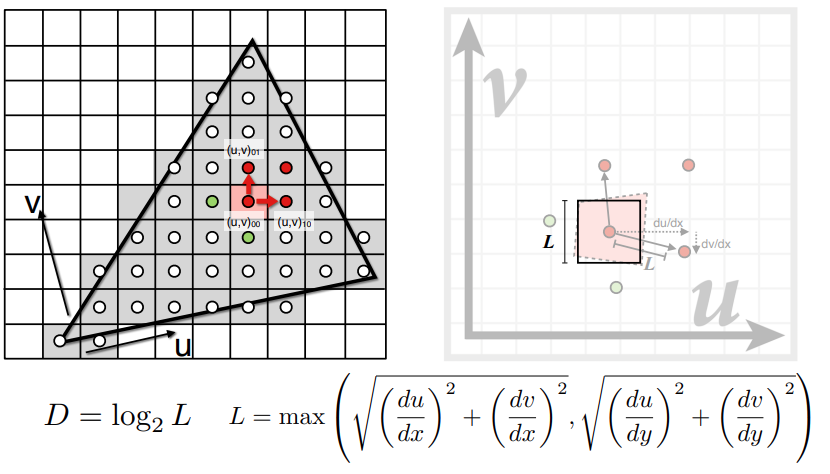

Screen pixels only need to sample the corresponding level of texels. But how do we compute the level ?

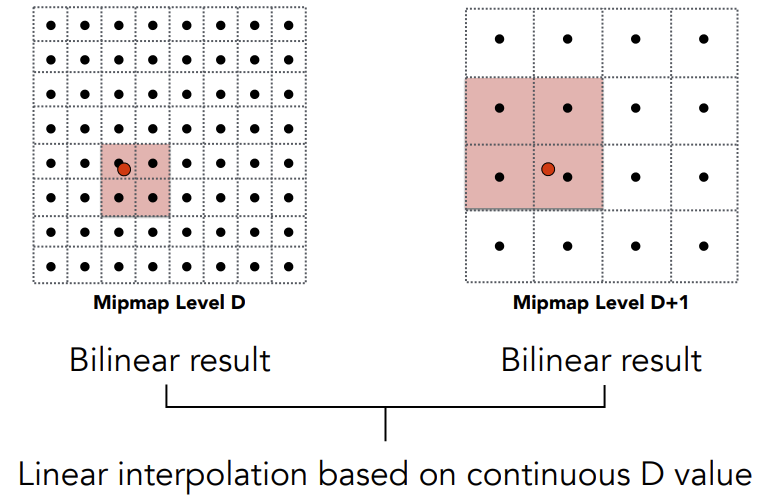

Screen pixels only need to sample the corresponding level of texels. But how do we compute the level ? Suppose we calculate a level of 1.8. Trilinear interpolation is required to obtain the final value for the screen pixel, which involves performing two bilinear interpolations followed by a linear interpolation.

Suppose we calculate a level of 1.8. Trilinear interpolation is required to obtain the final value for the screen pixel, which involves performing two bilinear interpolations followed by a linear interpolation.

To address this issue, anisotropic filtering and EWA filtering can be used. These methods generate different texture maps that support range queries beyond just square regions.

To address this issue, anisotropic filtering and EWA filtering can be used. These methods generate different texture maps that support range queries beyond just square regions.10~12 Geometry

13~16 Ray Tracing

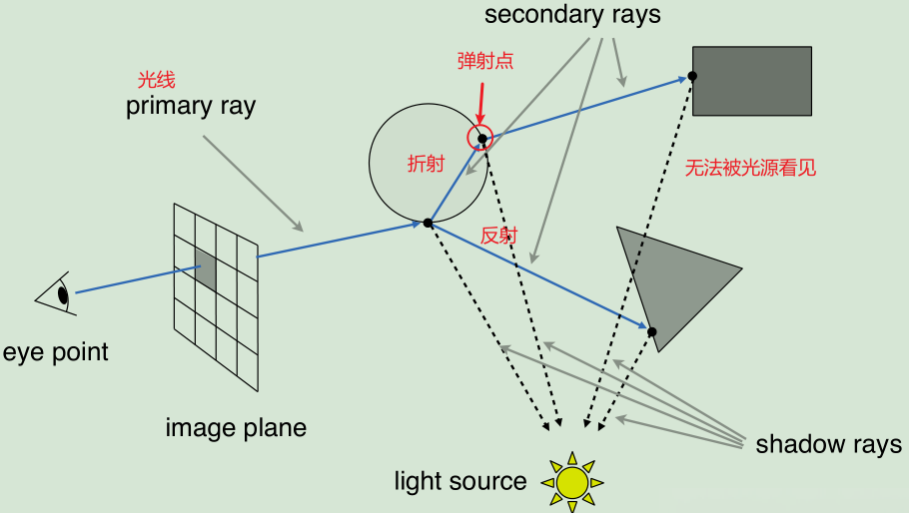

1. Recursive (Whitted-Style) Ray Tracing

Let's first learn about a traditional ray tracing algorithm known as Whitted-style ray tracing.

This method differs from conventional shading models in several key ways:

- Light Interaction: Unlike standard shading, this method assumes that light rays continue to reflect and refract after hitting an object.

- Shading Process: Each time reflection or refraction occurs (a bounce point), shading is computed, provided the point is not in shadow.

As shown in the diagram, this recursive process allows for realistic effects such as reflections, refractions, and shadows.

- Cast a ray from the viewpoint through the image plane and check for intersections with objects.

- Generate reflection and refraction rays upon intersection.

- Recursively trace the generated rays to compute lighting effects.

- Each bounce point is shaded based on its visibility to the light source.

- Combine all shading contributions using weighted summation to determine the final pixel color.

To facilitate further explanations, the course defines the following concepts:

To facilitate further explanations, the course defines the following concepts:- Primary Ray: The initial ray cast from the viewpoint that first intersects an object.

- Secondary Rays: Rays generated after bouncing off a surface, including reflection and refraction rays.

- Shadow Rays: Rays used to determine visibility between a point and a light source.

1.1 Ray-Surface Intersection

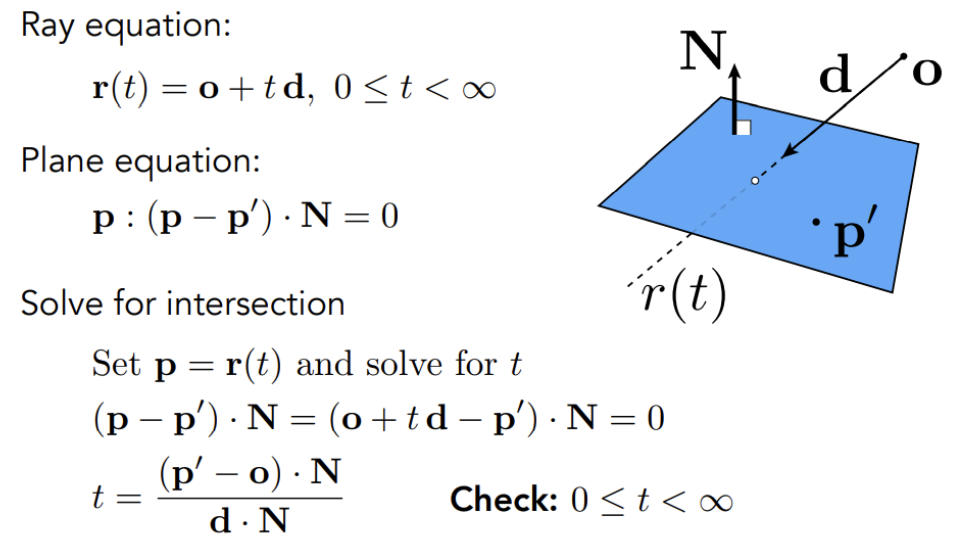

1.1.1 Ray Equation

Ray Definition

A ray can be defined by a point o and a direction vector d as:

1.1.2 Ray Intersection With Implicit Surface

As illustrated in the diagram:

Ray equation:

General implicit surface:

Substituting the ray equation:

Solve for real, positive roots to determine intersection points.

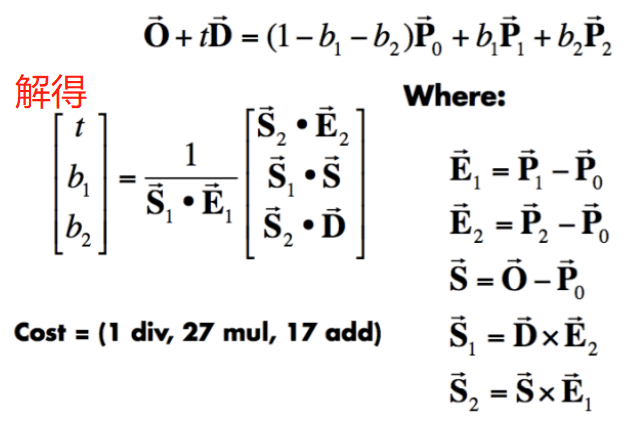

1.1.3 Ray Intersection With Triangle

Calculation Steps:

- Find the intersection between the ray and the plane containing the triangle.

- Check whether the intersection point lies inside the triangle.

- Solve for , , and using three equations with three unknowns.

- Check validity of the solution:

- The intersection must be along the ray direction ().

- The intersection point must be inside the triangle (, ).

1.2 Accelerating Ray-Surface Intersection

Instead of checking for intersections between the ray and every triangle, we first check if the ray intersects a bounding box that encloses the object.

- If the ray does not intersect the bounding volume, it definitely does not intersect the object.

- If the ray does intersect the bounding volume, then we proceed with a more detailed intersection test.

1.2.1 Bounding Volume

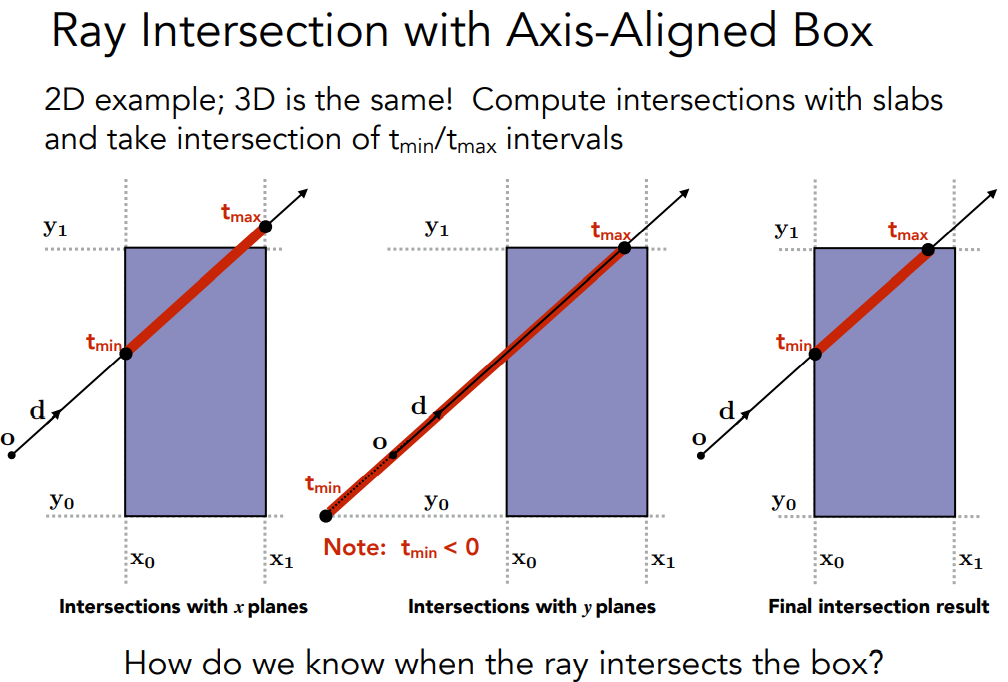

One commonly used bounding volume is the Axis-Aligned Bounding Box (AABB).

- An AABB is aligned with the coordinate axes, meaning its faces are parallel to the xOy, yOz, or zOx planes.

- During intersection testing, checking against an AABB first helps eliminate unnecessary intersection tests with complex geometry.

- If the ray intersects the AABB, we proceed with a more precise intersection test with the actual object.

Key Concepts for Ray-AABB Intersection

- The ray enters all slabs (pairs of parallel planes) before it is considered inside the bounding box.

- If the ray exits any slab, it is considered to have left the bounding box.

- An intersection occurs when .

- If , the box is behind the ray, meaning there is no valid intersection.

- If and , the ray starts inside the box and intersects it.

- For a 3D bounding box, the entry and exit times are computed as:

Conclusion: A ray intersects the AABB if and only if and .

(This algorithm is based on the Liang-Barsky algorithm.)